According to O’Reilly’s 2020 survey on the state of data quality, more than 60% of respondents named data integration issues as a major cause of poor data quality. Today, the key challenge companies face is maintaining the overall health of their existing data.

Data quality deteriorates for several reasons, be it the age of the application, multiple iterations of the data model, or frequent changes to the data. These factors leave the data ungoverned and inaccurate. This blog walks you through one of the data remediation frameworks used by enterprises: remediation through policy.

The Data Quality Index drops as ungoverned data comes into use, sometimes to the point where insights drawn from the data are no longer credible. As a result, stakeholders cannot be confident about taking this data forward to their customers.

Likewise, companies are adopting big data technologies and cloud platforms for faster, more streamlined data access. These migrations are becoming a major requirement, and the key challenge companies face during them is preserving the authenticity and accuracy of their data.

When it comes to data, enterprises face two major challenges: managing data migration while incremental data keeps arriving, and ensuring data hygiene and governance. This blog explains how to manage these challenges with policy-based data remediation. It also discusses a data remediation framework proposed by Xoriant experts for policy-based remediation, along with its benefits.

Importance of Data Governance During Data Migration

Enterprises struggle to manage client data that resides across multiple systems and applications. Trillions of rows of related data sit in isolated silos. Enterprises need to collate, analyze, and report on this data. In other words, the data must carry the right information to give a historical view and to support forecasts of data trends and business aspects.

Unclean, ungoverned data loses both value and potential. It is therefore a significant effort for companies to gather complete data, cleanse it, and enrich it. Enriched data in turn yields better insights. Assessing the potential risks of data management helps enterprises and ISVs determine the value of the data they hold.

With the right data remediation framework and best data remediation techniques, enterprises can:

- Ensure compliance with regulatory and legal obligations

- Minimize costs linked with storage footprints

- Identify sensitive data and implement suitable security measures

- Allow the end-user to access meaningful real-time data - appropriately cleansed, sorted, and integrated

Understanding Policy-based Data Remediation

What is Policy-Based Remediation?

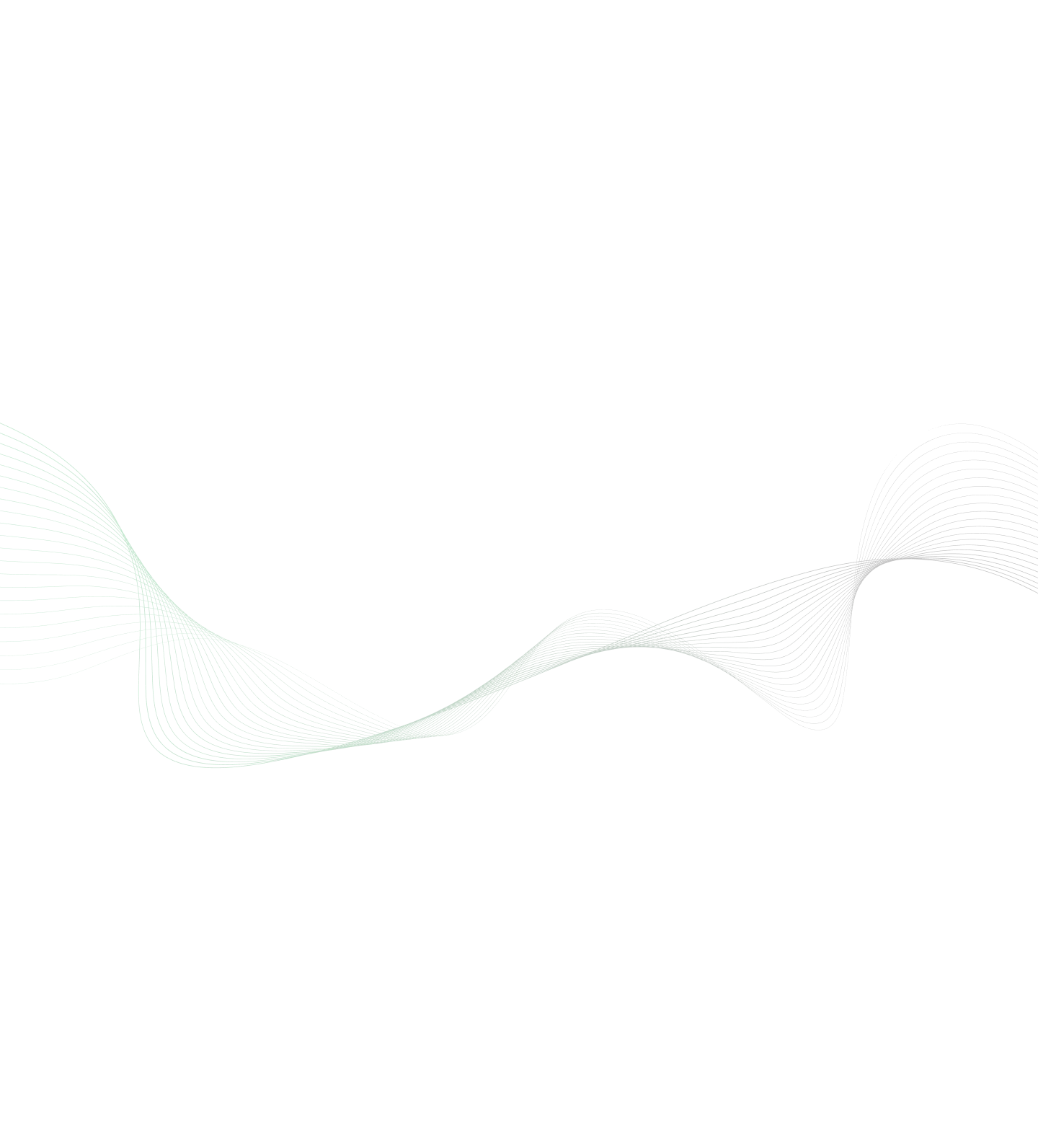

Policy-based means that companies set up rules or policies, such as data validation rules, data integrity rules, or business-driven rules, to correct and enrich data quality. Once stakeholders define these policies, rule engines apply them to existing client data to ensure data governance.
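To make the idea of a policy concrete, here is a minimal sketch in Python. It is only an illustration, not the Xoriant implementation: the `Policy` class, the field names (`id`, `email`, `balance`), and the three example checks are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Record = Dict[str, object]


@dataclass(frozen=True)
class Policy:
    """One stakeholder-defined rule (hypothetical structure)."""
    name: str
    kind: str  # "validation", "integrity", or "business"
    check: Callable[[Record], bool]  # returns True when the record complies


# Illustrative policies only; real ones would come from stakeholders.
POLICIES: List[Policy] = [
    Policy("email_present", "validation",
           lambda r: bool(r.get("email"))),
    Policy("id_is_positive", "integrity",
           lambda r: isinstance(r.get("id"), int) and r["id"] > 0),
    Policy("balance_non_negative", "business",
           lambda r: isinstance(r.get("balance"), (int, float))
           and r["balance"] >= 0),
]


def violations(record: Record, policies: List[Policy] = POLICIES) -> List[str]:
    """Return the names of all policies the record fails, in policy order."""
    return [p.name for p in policies if not p.check(record)]
```

A record that passes every policy returns an empty list, so downstream code can treat any non-empty result as a signal to correct or reject the record.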

So, how does this data remediation framework work? As data volumes grow into terabytes and petabytes, moving data to Big Data or cloud platforms becomes a requirement. Companies can cleanse and govern their data after migrating it to these platforms, based on the governing policies defined by the user. Moreover, these policies are generic, so business users can apply them to any data pertinent to their domain.

Exploring Data Remediation Framework for Data Migration

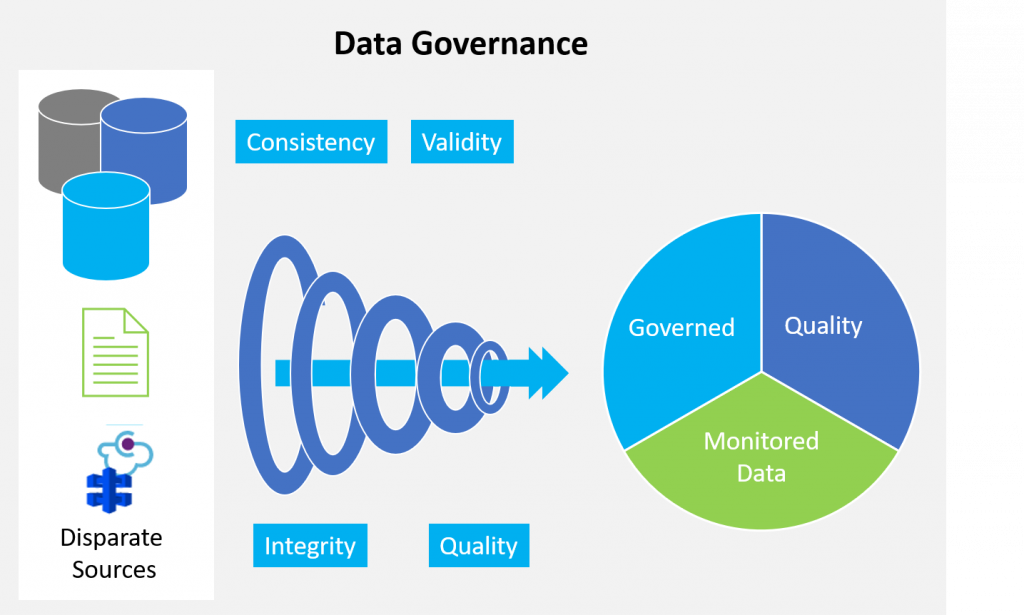

Now, let us look at the details of a data remediation approach proposed by Xoriant experts. According to our experts, a data remediation framework should be configurable, injectable, and extensible so that it can support the migration of data from disparate legacy systems to Big Data platforms. The framework should have a mature and extensive rule engine that runs important quality checks on the data and governs it during the ETL process into the Big Data Lake.

Our experts propose a Big Data solution that works on data collated from different source systems and applies validation, integrity, and business rules to turn it into quality data. The solution also provides source connectors for different kinds of sources, be it files, databases, or APIs.
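One way to picture pluggable source connectors is a shared interface with one implementation per source type. The sketch below is an assumption made for illustration: `SourceConnector`, `CsvConnector`, `SqliteConnector`, and `collate` are hypothetical names, a CSV stream and an in-memory SQLite table stand in for the file and database sources, and an API connector is left out to keep the example free of network calls.

```python
import csv
import io
import sqlite3
from abc import ABC, abstractmethod
from typing import Dict, Iterable, Iterator, TextIO


class SourceConnector(ABC):
    """Common interface every source connector implements (hypothetical)."""

    @abstractmethod
    def read(self) -> Iterator[Dict[str, object]]:
        """Yield one record per row as a column-name to value mapping."""


class CsvConnector(SourceConnector):
    def __init__(self, stream: TextIO) -> None:
        self.stream = stream

    def read(self) -> Iterator[Dict[str, object]]:
        # csv.DictReader uses the header row as keys; values stay strings.
        yield from csv.DictReader(self.stream)


class SqliteConnector(SourceConnector):
    def __init__(self, conn: sqlite3.Connection, query: str) -> None:
        self.conn = conn
        self.query = query

    def read(self) -> Iterator[Dict[str, object]]:
        cursor = self.conn.execute(self.query)
        columns = [description[0] for description in cursor.description]
        for row in cursor:
            yield dict(zip(columns, row))


def collate(connectors: Iterable[SourceConnector]) -> Iterator[Dict[str, object]]:
    """Chain records from every source into one stream for the rule engine."""
    for connector in connectors:
        yield from connector.read()


# Usage with two stand-in sources.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE assets (id INTEGER, name TEXT)")
conn.execute("INSERT INTO assets VALUES (2, 'router')")
csv_source = io.StringIO("id,name\n1,server\n")

records = list(collate([
    CsvConnector(csv_source),
    SqliteConnector(conn, "SELECT id, name FROM assets"),
]))
```

Note that the CSV source yields `id` as a string while the database yields an integer. Differences like this are what the later validation stages are meant to catch.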

As in any traditional ETL process, the data flows through several stages before it reaches the final target. Each stage enforces its own policies and checks, so the data is cleansed and regularized in phases. The stages of data remediation can be as follows:

- First Stage: You must apply the schema validation check in this stage of data remediation. In this stage, the framework captures and records any change in schema from the expected one for further correction.

- Second Stage: It is important to reject erroneous and redundant data. In this stage of data remediation, multiple rule engines for integrity and business validation are applied.

- Third Stage: The data that reaches this final stage in the Big Data Lake is of the highest quality and is governed according to the business users’ governance policies.
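The three stages above can be sketched as a small pipeline. This is a simplified illustration under stated assumptions, not the framework itself: the expected schema, the duplicate-`id` and negative-`amount` rejection rules, and all function names are hypothetical, and records that deviate from the schema are held back for correction rather than passed on.

```python
from typing import Dict, List, Tuple

Record = Dict[str, object]

# Hypothetical expected schema: column name -> Python type.
EXPECTED_SCHEMA = {"id": int, "name": str, "amount": float}


def stage_one_schema_check(records: List[Record]) -> Tuple[List[Record], List[str]]:
    """First stage: record every deviation from the expected schema."""
    conforming, schema_issues = [], []
    for index, record in enumerate(records):
        missing = sorted(set(EXPECTED_SCHEMA) - set(record))
        unexpected = sorted(set(record) - set(EXPECTED_SCHEMA))
        wrong_type = sorted(
            column for column, expected in EXPECTED_SCHEMA.items()
            if column in record and not isinstance(record[column], expected)
        )
        if missing or unexpected or wrong_type:
            schema_issues.append(
                f"record {index}: missing={missing} "
                f"unexpected={unexpected} wrong_type={wrong_type}"
            )
        else:
            conforming.append(record)
    return conforming, schema_issues


def stage_two_reject(records: List[Record]) -> Tuple[List[Record], List[Record]]:
    """Second stage: reject redundant (duplicate id) and erroneous records."""
    seen_ids = set()
    accepted, rejected = [], []
    for record in records:
        if record["id"] in seen_ids or record["amount"] < 0:
            rejected.append(record)
        else:
            seen_ids.add(record["id"])
            accepted.append(record)
    return accepted, rejected


def run_pipeline(records: List[Record]) -> Dict[str, list]:
    """Third stage: only records that survived both checks reach the lake."""
    conforming, schema_issues = stage_one_schema_check(records)
    accepted, rejected = stage_two_reject(conforming)
    return {"lake": accepted, "schema_issues": schema_issues, "rejected": rejected}
```

Keeping each stage as a separate function mirrors the phased cleansing described above: a stage can be tested, replaced, or extended without touching the others.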

Designing Data Remediation Rules

You can define and configure a comprehensive integrity rule engine for each table. Each configuration file is kept separate from the base code, which makes it easier to configure rules for any schema, table, and column combination.

Most importantly, you can group rules by type: data validation rules, metadata validation rules, and business rules. Each rule can be configured dynamically, and a feature lets you disable any rule so that processing can proceed as required.
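A configuration-driven rule engine along these lines might look like the following sketch. Everything in it is an assumption made for illustration: the JSON layout, the `sales.orders` table, the rule names, and the `enabled` flag are hypothetical, and the inline JSON string stands in for a configuration file kept outside the code base.

```python
import json
from typing import Callable, Dict, List

# Stand-in for an external configuration file, keyed by "schema.table".
CONFIG_JSON = """
{
  "sales.orders": [
    {"rule": "not_null", "type": "data_validation",
     "column": "order_id", "enabled": true},
    {"rule": "max_length", "type": "metadata_validation",
     "column": "country", "limit": 2, "enabled": true},
    {"rule": "positive", "type": "business",
     "column": "amount", "enabled": false}
  ]
}
"""

# Rule implementations live in code; the configuration decides where they apply.
RULES: Dict[str, Callable[[object, dict], bool]] = {
    "not_null": lambda value, cfg: value is not None,
    "max_length": lambda value, cfg: value is None or len(str(value)) <= cfg["limit"],
    "positive": lambda value, cfg: isinstance(value, (int, float)) and value > 0,
}


def failed_rules(table: str, row: dict, config: dict) -> List[str]:
    """Return 'column:rule' for every enabled rule that the row fails."""
    failures = []
    for cfg in config.get(table, []):
        if not cfg.get("enabled", True):
            continue  # disabled rules are skipped so processing can proceed
        if not RULES[cfg["rule"]](row.get(cfg["column"]), cfg):
            failures.append(f'{cfg["column"]}:{cfg["rule"]}')
    return failures


config = json.loads(CONFIG_JSON)
```

Because the `positive` rule is disabled in this configuration, a row with a negative `amount` passes until someone flips `enabled` to `true`, with no change to the code.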

Now, let’s talk about audit and monitoring. Whenever a rule fails at any stage, you can generate and maintain an error and audit record. This gives users important feedback and an opportunity to fix the issue at the source. Above all, key stakeholders can gauge the historical and present Data Quality Index with any downstream reporting system.
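As a rough illustration of audit records feeding a quality metric, consider the sketch below. The blog does not define how the Data Quality Index is calculated, so the formula used here (the percentage of checks that passed) is purely an assumption, as are the `AuditRecord` fields and the function names.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List


@dataclass(frozen=True)
class AuditRecord:
    """One rule failure, kept for feedback to the data owner (hypothetical)."""
    stage: str
    table: str
    rule: str
    record_key: str
    logged_at: datetime


def log_failure(audit_log: List[AuditRecord], stage: str, table: str,
                rule: str, record_key: str) -> AuditRecord:
    """Append a timestamped audit entry for a failed rule and return it."""
    entry = AuditRecord(stage, table, rule, record_key,
                        datetime.now(timezone.utc))
    audit_log.append(entry)
    return entry


def data_quality_index(checks_run: int, audit_log: List[AuditRecord]) -> float:
    """Assumed definition: percentage of checks that did not fail."""
    if checks_run <= 0:
        raise ValueError("checks_run must be positive")
    passed = checks_run - len(audit_log)
    return round(100.0 * passed / checks_run, 2)
```

Storing the stage, table, and rule with each failure lets a reporting system group failures by source and track the index over time.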

Data Remediation Framework Benefits for Enterprises

The key benefits of a policy-driven data remediation framework:

- The framework is platform-independent and configurable at every stage of the data flow.

- Rules can be set at the most granular level, down to a schema, table, and column combination.

- The rules engine can be extended to support more generic as well as business-specific data, which makes it more robust.

Policy-based Data Remediation and Governance for a Global Bank

A leading multinational investment bank’s critical inventory and infrastructure data was spread across multiple applications. The diffused nature of the business resulted in data decentralization, duplication, and integrity issues. Xoriant developed a Big Data lake data governance solution that applies a policy-based data remediation process using an Apache Spark, Hive, and Java technology stack. Most importantly, the solution reduced the client’s technology costs by 30% and enhanced governance with a single source of trusted data and business value.

Read the full story on how Xoriant's policy-based data remediation framework helped the multinational investment bank.

In conclusion, you can explore Xoriant Data Governance solutions, services, and offerings.

